In June last year, Facebook open-sourced AI Habitat, an advanced simulation platform for training AI agents in simulated physical environments. At the time, however, AI Habitat supported only visual perception.

To address this, Facebook recently released SoundSpaces, which is built on AI Habitat. It is billed as the world’s first AI platform with both visual and auditory perception, and it is meant to greatly improve a robot’s ability to recognize and understand its environment.

Facebook stated in its blog that while vision is the basis of perception, sound is equally important: it carries a wealth of information that is often imperceptible through vision or other data. Yet few systems and algorithms use sound to build a physical understanding of the world, which is why Facebook released SoundSpaces.

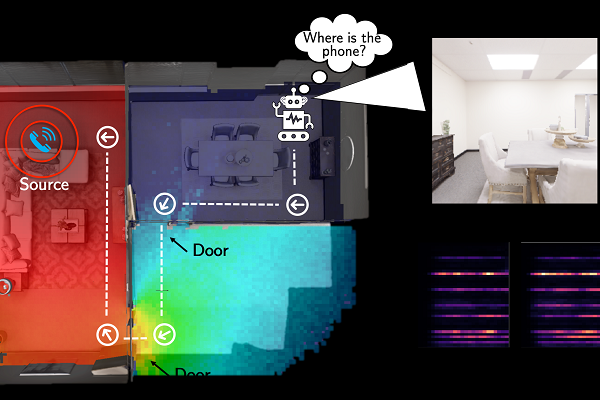

SoundSpaces is an audio rendering library and dataset based on acoustic simulation of 3D environments. It is designed to be used with Facebook’s open source simulation platform AI Habitat. Its sound sensor can place any sound from the dataset, which was collected from real environments, into a simulated scene. The AudioGoal task then challenges an agent to locate the object that is emitting the sound in that environment.
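As a rough illustration of what inserting a sound into a simulated environment can involve, the sketch below convolves a dry source signal with one impulse response per ear, a common technique in acoustic simulation. This is an assumption made for illustration and not SoundSpaces’ actual API: the function name `render_binaural` and the toy impulse responses are hypothetical.

```python
import numpy as np


def render_binaural(source, rir_left, rir_right):
    """Hypothetical helper: simulate what a two-ear listener would hear.

    source    -- 1-D array, the dry (echo-free) sound emitted by the object
    rir_left  -- 1-D array, impulse response from the source to the left ear
    rir_right -- 1-D array, impulse response from the source to the right ear

    Both impulse responses must have the same length so the two channels
    can be stacked into one (2, n_samples) array.
    """
    if len(rir_left) != len(rir_right):
        raise ValueError("impulse responses must have equal length")
    left = np.convolve(source, rir_left)
    right = np.convolve(source, rir_right)
    return np.stack([left, right])


# Toy data (made up): the sound reaches the left ear after one sample at
# full strength, and the right ear after two samples at half strength,
# as if the source were located to the listener's left.
source = np.array([1.0, 0.5, 0.25])
rir_left = np.array([0.0, 1.0, 0.0])
rir_right = np.array([0.0, 0.0, 0.5])

binaural = render_binaural(source, rir_left, rir_right)
print(binaural.shape)
print(binaural)
```

The difference in arrival time and loudness between the two channels is the kind of cue an agent could use to work out where a sound is coming from.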

With SoundSpaces, today’s robots can determine the position of a mobile phone that is ringing, and this ability could help people complete a variety of complex tasks in the physical world in the future.